Africa Check exists to promote accuracy in public debate and the media in Africa.

On a continent of 55 countries, tens of thousands of media houses, hundreds of thousands of competing organisations and several hundred million people, it is not possible – or desirable – for one organisation to set itself up to fact-check every claim made.

But happily for our team, that’s not our aim.

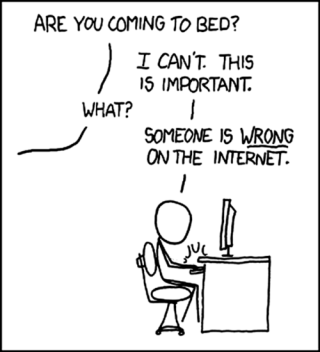

We do try to check the evidence behind some of the important claims made in public. But, as this cartoon suggests, nobody can do everything.

So our broader goal is to encourage others to check public claims themselves.

Question – but don’t dismiss

People have busy lives, so it’s often easier – for a journalist, a researcher, a public servant or a businesswoman – to take a claim made in public at face value. Over the years, we have seen this done by our allies in the media, by the courts and by the public at large.

Unfortunately this allows public and other figures, by accident or on purpose, to mislead us all without consequences.

But it’s important not to give in to cynicism when fighting misinformation. Yes, some politicians, media houses, businesses and others do seek to mislead, but that doesn’t mean they all do. And if a public figure misleads on one issue, that doesn’t mean they mislead on all issues.

We hope to encourage open-minded scepticism, instead of cynicism. Whether you are a judge or a journalist, a businesswoman or a health worker, it’s best to question – not dismiss – a claim until there’s reliable, verifiable evidence to back it up.

What to ask

To produce our reports we use our own experience as researchers and journalists, with help and advice from specialist experts in a range of fields. We’ve used the same approach to draw up some tips and advice on how to fact-check, starting with the key questions.

Where is the evidence? Is the evidence verifiable? And is the evidence sound?

How was the information gathered? When? By whom? What biases should the journalist, judge or businesswoman look out for in how the information was collected and reported?

These are all questions we examine on our How we fact check page.

Where to look

We’ve also found it’s important to know where to look.

This is why we continue to build up a library of guides and factsheets for you to use as sources of reliable data on key questions.

And our Info Finder offers a carefully selected collection of reliable facts and useful sources of data on a wide range of topics for Africa, Kenya, Nigeria and South Africa.

Africa Check is not, and of course cannot be, responsible for the accuracy of the data in all these sources. They are third parties, and we cannot and will not vouch for their accuracy on every issue. Besides, judgements about the accuracy or inaccuracy of data are rarely simple – there are always shades of grey.

We hope these sources are useful, but we also hope that as you start checking the claims people make, you maintain your open-minded scepticism and assess the accuracy of the data from these sources as well.

Handy tipsheets

TIPSHEET #1: False info on WhatsApp

Just because it’s online doesn’t mean it’s true! Ask yourself (and others) these questions before forwarding a message.

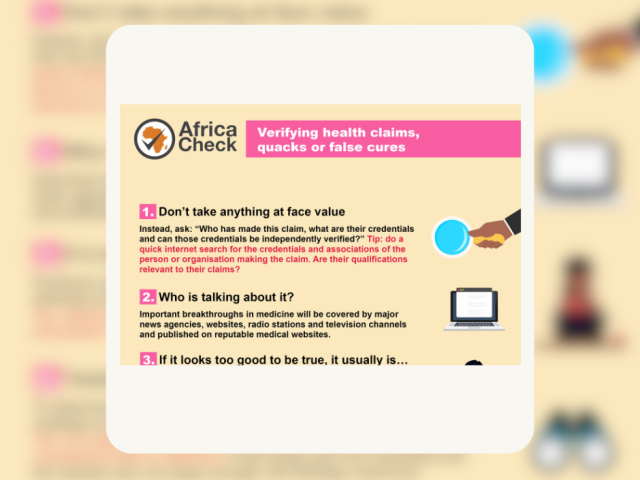

TIPSHEET #2: Fake health claims

Use this handy guide to help you sift the real from the dodgy when it comes to your health.

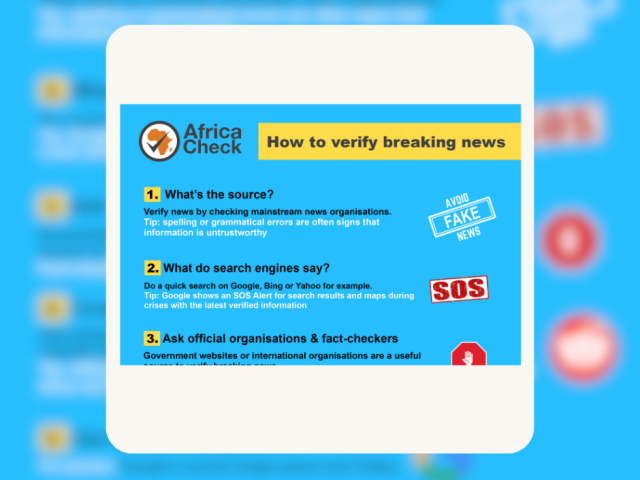

TIPSHEET #3: Verifying breaking news

How can you check if the information you receive is credible? Follow these simple steps to verify breaking news.

How-to videos

How to avoid falling for false news

False news can lead to poor health decisions, harden stereotypes, create social divisions and damage the public’s trust in the media. Plus, these stories get shared a lot and attract traffic to the dodgy websites that publish them. Here are our four top tips for spotting a false news story.

How to verify images and videos

They say “seeing is believing”, but in the age of false news and false information, we need to be more and more careful about trusting our eyes. So how can you tell if a video or image is true or false? Here are a few simple steps for verifying videos or images.

Misinformation shared on WhatsApp

If it’s a forwarded message from mom on WhatsApp, it must be true. Right? (SPOILER: NO) Help break the cycle of misinformation by asking these 5 questions before forwarding anything on WhatsApp.

Job scams – red flags

Fake bank jobs, fake supermarket jobs, fake government jobs… it seems social media is awash with fake job ads. How can you tell the difference between a real job ad and a scam? Watch the video for red flags to look out for.

Quack cures (and how to avoid them)

Medical advice and health tips are popping up everywhere – from WhatsApp to Facebook and Twitter. But many of these claims aren’t supported by science, and some can prove fatal. Don’t let bad information put your health and life at risk. Here are a few red flags to look out for.

How false information spreads

Ever wonder how false information, often referred to as "fake news", spreads so easily and how you can play a role in stopping it? We cover false information, including mis- and disinformation, how it spreads, and the role bias plays.